AI Liability and Black Box Dilemmas Surge in Courts

Introduction

The rise of artificial intelligence (AI) in legal, corporate, and criminal contexts has prompted a surge in lawsuits questioning accountability for errors generated by automated systems. Courts worldwide are grappling with “black box” dilemmas, where AI’s decision-making processes are opaque, raising significant due process and liability concerns.

These cases, ranging from criminal risk assessments to AI-assisted legal filings, challenge traditional frameworks of responsibility, transparency, and regulatory oversight. At the same time, tech giants such as Apple and Microsoft face intense global scrutiny over how they build and deploy AI.

AI Errors in Criminal Risk Assessments

A growing number of cases involve AI algorithms used in criminal justice, particularly risk assessment tools that inform sentencing, bail, and parole decisions. Critics argue that opaque models may:

- Produce biased or inconsistent recommendations.

- Violate due process, as defendants cannot meaningfully challenge algorithmic reasoning.

- Amplify systemic inequities by relying on historical data that embeds societal bias.

Courts are increasingly examining whether algorithmic opacity gives rise to legal liability, and whether the developers or agencies using these tools can be held accountable for erroneous or discriminatory outcomes.

Legal Sector Challenges: AI-Generated Errors

In parallel, the legal profession is facing scrutiny over AI-generated content, including:

- Fabricated case citations or misattributed references in filings drafted with AI tools.

- Lawyers’ liability for unverified AI output, which could mislead courts or clients.

- Professional sanctions for negligently failing to verify AI-generated work.

These cases highlight the emerging tension between efficiency and accountability in legal practice, prompting law firms to adopt verification protocols and ethical guidelines for AI use.

Tech Giants Under Global Scrutiny

Major technology companies are increasingly the targets of lawsuits and regulatory inquiries related to AI:

- Apple faces legal challenges over the data used to train its AI systems, including allegations of copyright infringement and improper dataset sourcing.

- Microsoft faces antitrust scrutiny over its investments in and partnership with OpenAI, particularly over the competitive advantage they may give it in AI deployment.

International regulators are also monitoring these cases, considering cross-border implications for AI governance, intellectual property, and market fairness.

Black Box Dilemma and Accountability

The “black box” problem refers to AI systems whose decision-making logic cannot be readily understood by users, regulators, or even their developers. This opacity complicates the determination of liability:

- Who is responsible when AI produces harmful or illegal outputs?

- How can courts verify algorithmic fairness and accuracy?

- What constitutes reasonable human oversight in AI deployment?

Legal scholars argue for explainable AI (XAI) standards, requiring models to produce transparent, interpretable outputs that support judicial review and regulatory compliance.

Regulatory and Policy Implications

The spike in AI liability cases has prompted discussion of legislative frameworks for AI accountability, including:

- Mandatory audits of high-stakes AI systems in sectors like finance, healthcare, and law enforcement.

- Clear attribution of responsibility between developers, deployers, and end-users.

- Guidelines defining acceptable levels of risk, along with recourse mechanisms for people harmed when AI fails.

Policymakers are weighing whether existing tort and contract law are sufficient or whether new AI-specific statutes are required to address these emerging challenges.

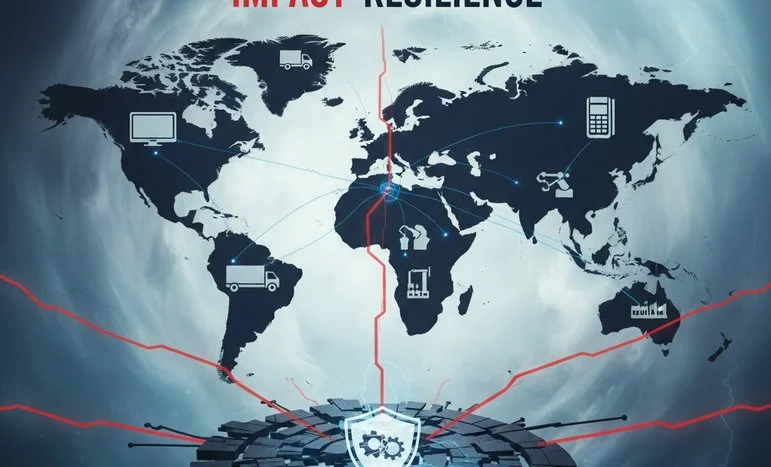

The Global Impact on AI Development

As lawsuits proliferate, companies are reassessing risk exposure, compliance procedures, and investment strategies. Some trends include:

- Increased emphasis on AI explainability and auditability in product development.

- Adoption of ethical AI frameworks aligned with international standards.

- Strategic partnerships with legal and regulatory advisors to mitigate litigation risk.

This heightened scrutiny may slow some AI deployment, but it could improve long-term accountability and public trust.

Conclusion

The rise of AI liability and black box dilemmas reflects a pivotal moment in legal and technological history. Courts are being asked to navigate complex questions of transparency, accountability, and fairness as AI becomes more deeply integrated into critical decision-making processes.

For tech firms, lawyers, and regulators alike, the challenge is balancing innovation with responsibility, ensuring that AI enhances societal outcomes without undermining legal rights or due process.
